The customer chose to protect their confidential information

A Data Flow Optimization Catalog for a Bank

About the client

In this business case, our customer was a banking entity. It provides financial services to its clients and is engaged in active market research efforts.

![Switzerland on the map](https://static.andersenlab.com/andersenlab/new-andersensite/bg-for-blocks/about-the-client/switzerland-desktop-2x.png)

Project overview

The customer asked us to build an internal search system for navigating a wide variety of documents: technical documents and tasks, articles from financial journals, instructions, operational documents, etc. The main challenge was that these documents, accumulated over a long period, were stored in different repositories, including local ones, which made them difficult to find.

Project scope

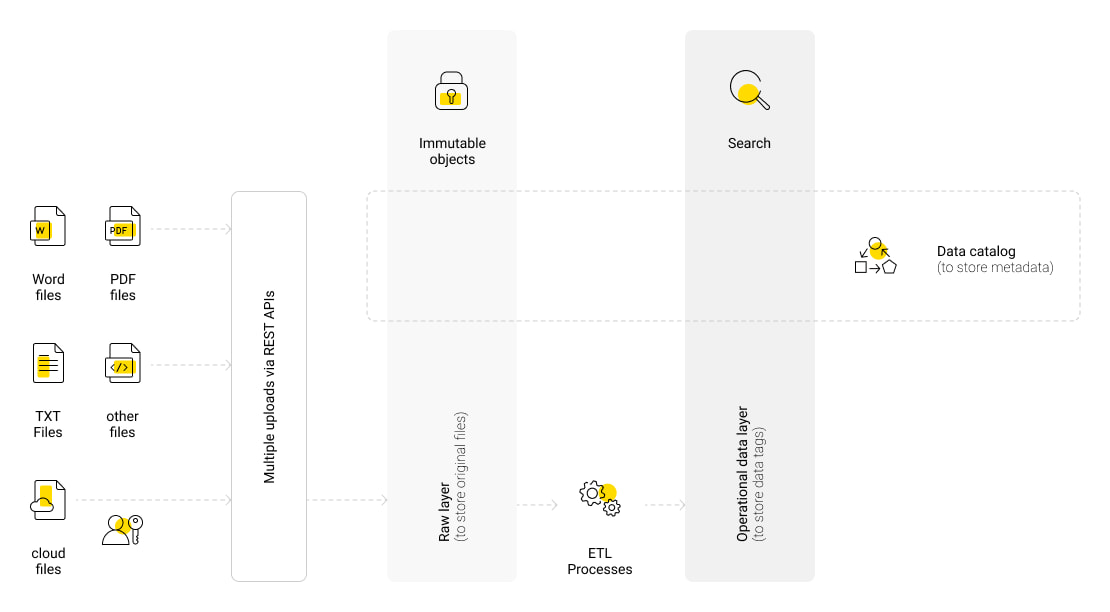

The logical diagram of the knowledge-sharing architecture

The customer's enormous collection of files was stored in different formats (Word, PDF, TXT, etc.) and in different locations, including the cloud, so it was poorly structured. The customer needed urgent assistance because they planned to scale up tenfold, and with such rapid growth, data storage chaos could become a serious threat.

Our team's solution brought all data under a common technology stack. Files are loaded via REST APIs into the raw layer, whose key property is immutability: once a file lands there, it is never modified. The files are then uploaded to the repository and processed by ETL. The operational data layer generates the tags, lexemes, and terminology used for search.
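The case study does not describe how the raw layer enforces immutability. The following is a minimal sketch, assuming a write-once store keyed by file path: each stored file also records its SHA-256 digest, an identical re-upload is treated as a harmless retry, and any attempt to replace the bytes at an existing path is rejected. The names `RawLayer`, `RawRecord`, and `ImmutableWriteError` are hypothetical, and an in-memory dict stands in for the real repository; in the described system, a Flask REST endpoint would presumably call something like `put`.

```python
import hashlib
from dataclasses import dataclass


class ImmutableWriteError(Exception):
    """Raised when a caller tries to overwrite a file already in the raw layer."""


@dataclass(frozen=True)
class RawRecord:
    path: str
    sha256: str
    content: bytes


class RawLayer:
    """Hypothetical write-once store standing in for the raw layer (in memory here)."""

    def __init__(self) -> None:
        self._records: dict[str, RawRecord] = {}

    def put(self, path: str, content: bytes) -> RawRecord:
        digest = hashlib.sha256(content).hexdigest()
        existing = self._records.get(path)
        if existing is not None:
            # Re-sending identical bytes is a harmless retry; anything else is a mutation.
            if existing.sha256 == digest:
                return existing
            raise ImmutableWriteError(f"{path!r} is already stored and cannot be changed")
        record = RawRecord(path, digest, content)
        self._records[path] = record
        return record

    def get(self, path: str) -> RawRecord:
        return self._records[path]
```

Making records frozen dataclasses means even code holding a reference to a stored record cannot alter it in place, which mirrors the "never modified" property at the object level too.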

The approach can be deployed either on the client's own servers or in the cloud.

A data catalog spans both layers. It is a storage service for configuration metadata, including the search paths to files in the raw layer, so that data can be mapped to the terminology of the operational data layer.

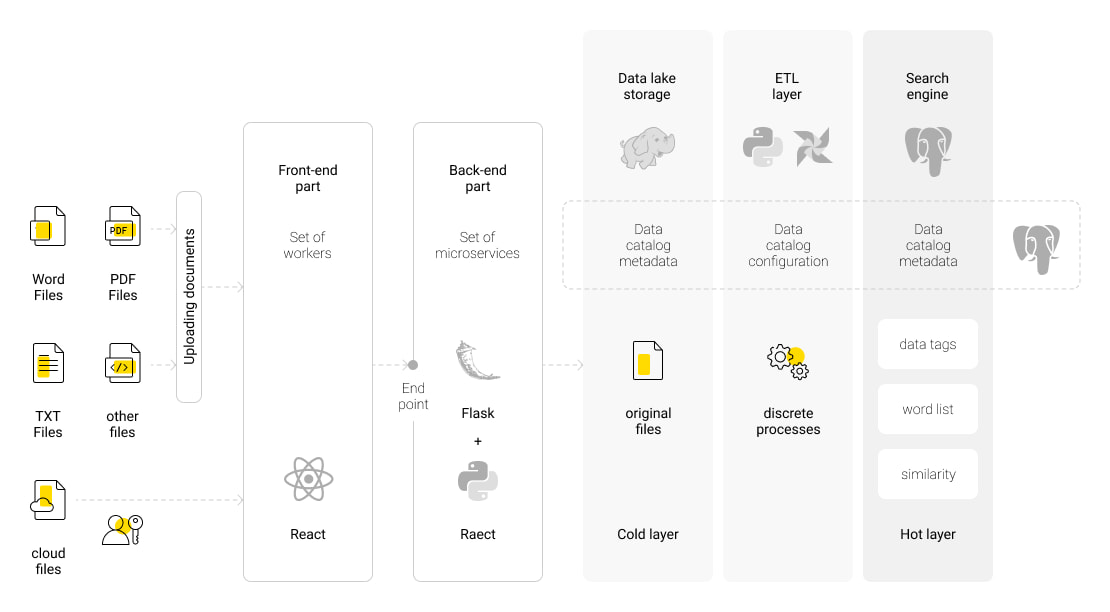

The physical layout of the knowledge-sharing architecture

Flask and Python were chosen for the back-end, React for the front-end, the Hadoop ecosystem for the cold layer, and Python with Apache Airflow for the ETL layer. The ETL layer scans the files and calculates the value of the tokens and lexemes they contain.
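The case study does not say how the "value" of a token is calculated. One common choice is TF-IDF, which rewards terms that are frequent in a document but rare across the collection. The sketch below assumes TF-IDF and a deliberately simple ASCII tokenizer; `tokenize` and `tf_idf` are illustrative names, not the project's code, and in the described system this logic would run inside an Airflow task.

```python
import math
import re
from collections import Counter

TOKEN_RE = re.compile(r"[a-z]+")


def tokenize(text: str) -> list[str]:
    """Lowercase the text and keep runs of ASCII letters (a deliberately simple tokenizer)."""
    return TOKEN_RE.findall(text.lower())


def tf_idf(docs: dict[str, str]) -> dict[str, dict[str, float]]:
    """Score every token in every document with TF-IDF.

    tf  = occurrences of the token in the document / total tokens in the document
    idf = log(N / number of documents containing the token)
    """
    counts = {doc_id: Counter(tokenize(text)) for doc_id, text in docs.items()}
    n_docs = len(docs)

    # Document frequency: in how many documents does each token appear at least once?
    doc_freq: Counter = Counter()
    for c in counts.values():
        doc_freq.update(c.keys())

    scores: dict[str, dict[str, float]] = {}
    for doc_id, c in counts.items():
        total = sum(c.values()) or 1  # avoid division by zero for empty documents
        scores[doc_id] = {
            tok: (n / total) * math.log(n_docs / doc_freq[tok]) for tok, n in c.items()
        }
    return scores
```

A term that appears in every document gets an IDF of `log(1) = 0`, so it contributes nothing to ranking, which is the behavior a search index usually wants for collection-wide boilerplate words.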

The resulting word lists, document similarities, data tags, and other derived data are then loaded into the hot layer, which is a PostgreSQL database.
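The case study does not specify how similarity between documents is measured. As an illustration only, the sketch below assumes cosine similarity over raw term-count vectors and emits `(doc_a, doc_b, score)` rows shaped for loading into a hot-layer table. The function names and the `threshold` parameter are hypothetical.

```python
import math
import re
from collections import Counter
from itertools import combinations


def term_counts(text: str) -> Counter:
    """Bag-of-words vector: lowercase ASCII words mapped to their counts."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity of two sparse term-count vectors (0.0 if either is empty)."""
    dot = sum(n * b[t] for t, n in a.items())  # Counter returns 0 for missing terms
    norm_a = math.sqrt(sum(n * n for n in a.values()))
    norm_b = math.sqrt(sum(n * n for n in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def similarity_rows(docs: dict[str, str], threshold: float = 0.0) -> list[tuple[str, str, float]]:
    """Pairwise similarities above `threshold`, as rows ready for a hot-layer table."""
    vectors = {doc_id: term_counts(text) for doc_id, text in docs.items()}
    rows = []
    for (id_a, vec_a), (id_b, vec_b) in combinations(sorted(vectors.items()), 2):
        score = cosine(vec_a, vec_b)
        if score > threshold:
            rows.append((id_a, id_b, round(score, 4)))
    return rows
```

Computing all pairs is quadratic in the number of documents, so at the scale the customer planned, a real pipeline would likely restrict comparisons (for example, to documents sharing tags); that refinement is left out here.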

A metadata catalog database serves a different function in each layer:

- In the cold layer, it stores the addresses of the original files in the Hadoop ecosystem;

- In the ETL layer, it holds the configuration for the Python and Apache Airflow pipeline that processes these files and interprets their content (stop words, word normalization rules, etc.);

- In the hot layer, it stores how search requests map to the original files in this ecosystem.
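To make the three roles of the catalog concrete, here is a minimal in-memory sketch. It assumes the cold layer maps document IDs to Hadoop file addresses, the ETL section is a free-form configuration dict, and the hot layer maps normalized search terms to document IDs. `MetadataCatalog` and its methods are hypothetical, and the `hdfs://` paths in the usage example are invented placeholders.

```python
from dataclasses import dataclass, field


@dataclass
class MetadataCatalog:
    """Hypothetical in-memory model of the metadata catalog's three roles."""

    # Cold layer: document id -> address of the original file in Hadoop storage.
    cold_locations: dict[str, str] = field(default_factory=dict)
    # ETL layer: processing configuration (stop words, normalization settings, ...).
    etl_config: dict[str, object] = field(default_factory=dict)
    # Hot layer: normalized search term -> ids of documents the term resolves to.
    hot_term_index: dict[str, set[str]] = field(default_factory=dict)

    def register_file(self, doc_id: str, location: str) -> None:
        self.cold_locations[doc_id] = location

    def index_term(self, term: str, doc_id: str) -> None:
        self.hot_term_index.setdefault(term.lower(), set()).add(doc_id)

    def resolve(self, term: str) -> list[str]:
        """Map a search term to original-file addresses via the hot and cold layers."""
        doc_ids = self.hot_term_index.get(term.lower(), set())
        return sorted(self.cold_locations[d] for d in doc_ids if d in self.cold_locations)
```

Usage: after `cat.register_file("doc1", "hdfs://namenode/docs/ops-manual.pdf")` and `cat.index_term("liquidity", "doc1")`, the call `cat.resolve("Liquidity")` returns the list containing that single address.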

We also built one set of servers for the Hadoop storage and another for the data catalog.

Architecture

Andersen's team decided that SQL queries were the best fit for this case, because they let users interact with the repository through an API without going through the React front-end.

In the React UI, end users find the items they need by typing into a search bar.

After entering a word or phrase, the user receives a table of links to the original documents stored in Hadoop; clicking a link downloads the document.

As a result, information retrieval takes less than one second.
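The kind of SQL query that turns a search phrase into a table of document links can be sketched as follows. This is an assumption-laden illustration: Python's built-in `sqlite3` stands in for the PostgreSQL hot layer so the example runs anywhere, and the `documents` and `term_index` tables, their columns, and the `search` function are hypothetical. With PostgreSQL and a driver such as psycopg, the placeholder style would be `%s` instead of `?`.

```python
import sqlite3

SCHEMA = """
CREATE TABLE documents (
    doc_id    TEXT PRIMARY KEY,
    title     TEXT NOT NULL,
    hdfs_path TEXT NOT NULL          -- address of the original file in the cold layer
);
CREATE TABLE term_index (
    term   TEXT NOT NULL,
    doc_id TEXT NOT NULL REFERENCES documents(doc_id),
    score  REAL NOT NULL,            -- e.g. a token value computed by the ETL layer
    PRIMARY KEY (term, doc_id)
);
"""

# Sum the per-term scores of each document and return the best matches first.
SEARCH_SQL = """
SELECT d.title, d.hdfs_path, SUM(t.score) AS relevance
FROM term_index AS t
JOIN documents AS d ON d.doc_id = t.doc_id
WHERE t.term IN ({placeholders})
GROUP BY d.doc_id, d.title, d.hdfs_path
ORDER BY relevance DESC, d.title
"""


def search(conn: sqlite3.Connection, phrase: str) -> list[tuple[str, str, float]]:
    """Return (title, link, relevance) rows for a word or phrase typed into the search bar."""
    terms = phrase.lower().split()
    if not terms:
        return []
    # One bound parameter per term: values are never pasted into the SQL string itself.
    sql = SEARCH_SQL.format(placeholders=", ".join("?" for _ in terms))
    return conn.execute(sql, terms).fetchall()
```

Because the query is plain SQL behind a function, the same lookup can be exposed through a REST endpoint for API clients and called by the React UI, which matches the team's stated reason for choosing SQL queries.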

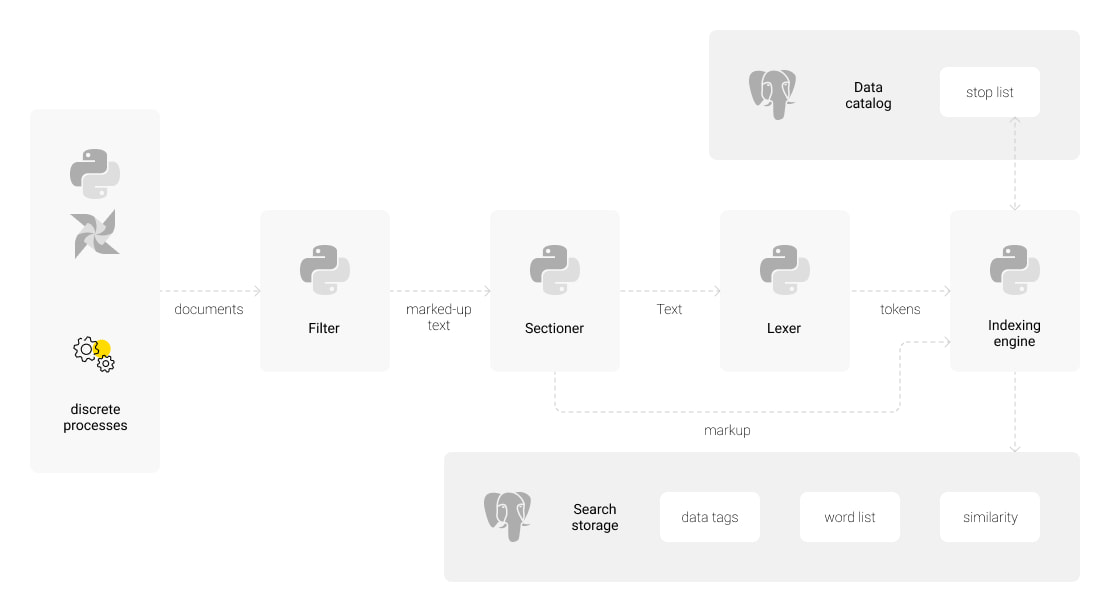

The physical scheme of the knowledge-sharing architecture

The diagram below describes the ETL process.

We chose Python for this solution because it offers a rich ecosystem of text-processing libraries, including tools for parsing and vocabulary normalization.

The data catalog also holds a stop list: words that do not belong to the relevant subject domain and are therefore excluded from search.
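A minimal sketch of the normalization and stop-list step, using only the standard library. It assumes normalization means case-folding plus stripping diacritics; real vocabulary normalization (stemming or lemmatization) would typically come from a dedicated library and is not shown. The `STOP_LIST` contents and the function names are hypothetical.

```python
import re
import unicodedata

# Hypothetical stop list: in the described system it is stored in the data catalog
# and holds words that carry no meaning for the bank's subject domain.
STOP_LIST = frozenset({"the", "a", "an", "and", "of", "to", "in", "please", "regards"})

WORD_RE = re.compile(r"[^\W\d_]+")  # runs of letters only (Unicode-aware, no digits)


def normalize_word(word: str) -> str:
    """Case-fold and strip diacritics, so 'Zürich' and 'ZURICH' both become 'zurich'."""
    decomposed = unicodedata.normalize("NFKD", word.casefold())
    return "".join(ch for ch in decomposed if not unicodedata.combining(ch))


def index_terms(text: str, stop_list: frozenset[str] = STOP_LIST) -> list[str]:
    """Tokenize, normalize, and drop stop-listed words, keeping document order."""
    terms = (normalize_word(w) for w in WORD_RE.findall(text))
    return [t for t in terms if t not in stop_list]
```

Stop-list entries are compared after normalization, so they should themselves be stored in normalized form in the catalog.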

Project results

The catalog solution built by our team delivered the following results:

- The data flow optimization catalog has reduced the response time for information retrieval to under one second;

- The new internal search system has raised the user satisfaction rate to 97%;

- Andersen's team has ensured that the solution can scale tenfold without data storage chaos;

- The system has made it easier to interact with and retrieve documents through a user-friendly API and UI.
